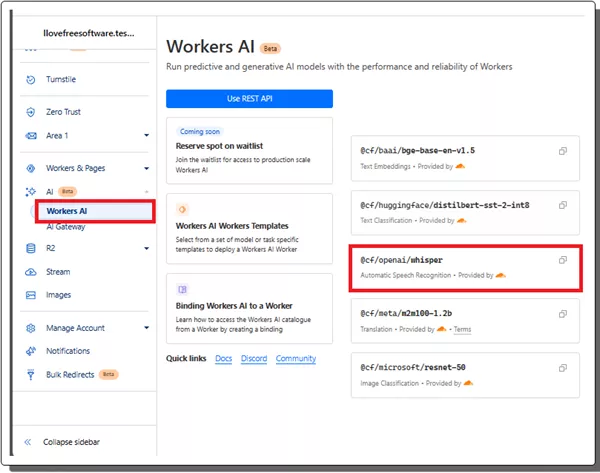

Cloudflare has a new beta tool called Workers AI. It lets you run predictive and generative AI models with the performance and reliability of Cloudflare Workers. It supports several popular AI models, such as Llama and Whisper. You can deploy these models in the cloud using Workers AI and then call them over a REST API. In this post, I will show you how to deploy Whisper as an API using Cloudflare for free.

Whisper is a very powerful speech-to-text model from OpenAI. It takes an audio file from you and returns the transcribed text. We have covered its desktop apps, a WebAssembly app, and even web apps. Whisper has many uses beyond plain speech to text. It can be used to generate subtitles for a video and can also translate them into various languages.

With Cloudflare, you can now deploy an instance of the Whisper model in the cloud for free. The next section is a step-by-step guide explaining how to do it.

How to Deploy Whisper as an API using Cloudflare for free?

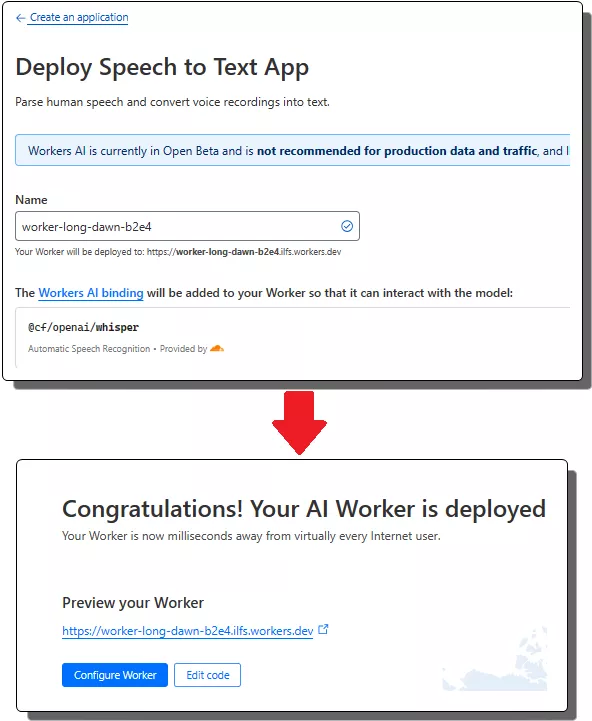

To deploy your own instance of Whisper on Cloudflare, log in to the Cloudflare dashboard and go to the Workers AI section. From there, find the speech-to-text model and deploy it.

Once the model has been deployed, you will get its URL. Copy it down and save it somewhere safe.

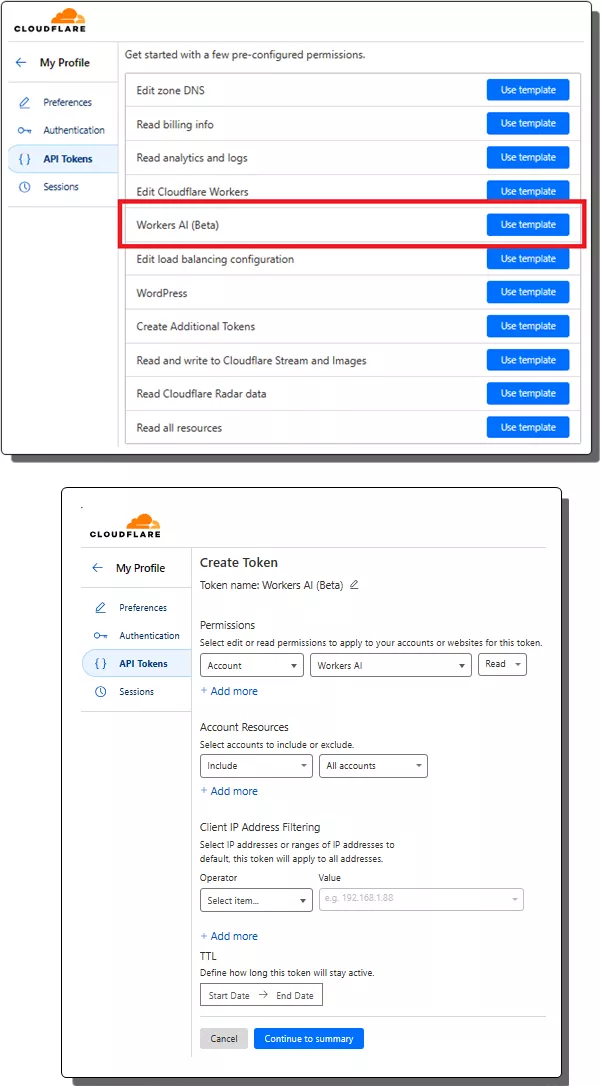

Now go to the Cloudflare API section and create a token. Select the Workers AI template and proceed with the default values.

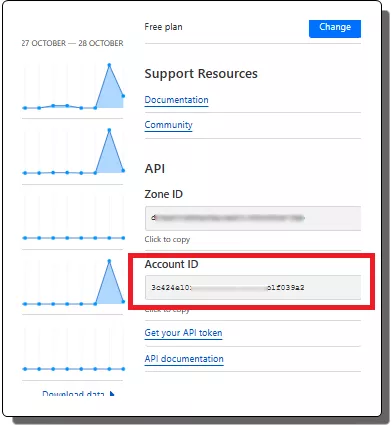

From the site’s dashboard in Cloudflare, you also need to copy your account ID.

With the model deployed and the API token and account ID in hand, you are ready to make API calls to your Whisper model. I would suggest using cURL, though Postman or one of its alternatives works too. We also recently wrote about an HTTPie client that can be used here.

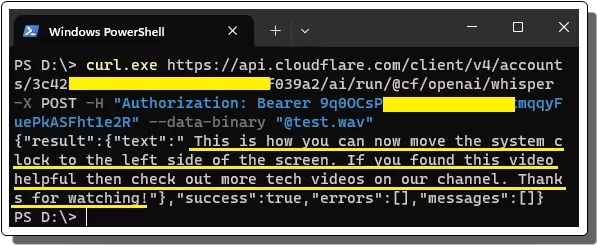

curl.exe https://api.cloudflare.com/client/v4/accounts/Account_ID/ai/run/@cf/openai/whisper -X POST -H "Authorization: Bearer API_Token" --data-binary @test.wav
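If you would rather call the API from a script, the same request can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: the function names `build_whisper_request` and `transcribe` are made up for illustration, and the URL layout and bearer-token header are simply copied from the cURL command above.

```python
import json
import urllib.request

# URL pieces taken from the cURL command in this post.
API_BASE = "https://api.cloudflare.com/client/v4/accounts"
MODEL = "@cf/openai/whisper"


def build_whisper_request(account_id: str, api_token: str, audio: bytes) -> urllib.request.Request:
    """Build the same POST request as the cURL command, without sending it.

    Hypothetical helper for illustration; it is not part of any Cloudflare SDK.
    """
    url = f"{API_BASE}/{account_id}/ai/run/{MODEL}"
    return urllib.request.Request(
        url,
        data=audio,  # raw audio bytes, like --data-binary @test.wav
        method="POST",
        headers={"Authorization": f"Bearer {api_token}"},
    )


def transcribe(account_id: str, api_token: str, audio_path: str) -> dict:
    """Read an audio file, send it to the model, and return the decoded JSON."""
    with open(audio_path, "rb") as f:
        audio = f.read()
    request = build_whisper_request(account_id, api_token, audio)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `transcribe("Account_ID", "API_Token", "test.wav")` with your real account ID and token should send the same request as the cURL command. Keeping the request builder separate from the network call makes it easy to inspect the URL and headers before sending anything.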

Make the API call with an MP3 or WAV file and look at the response it returns. The JSON response contains a “text” field that holds the final transcribed text from Whisper. It is as simple as that.
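To pull the transcription out of the response in code, something like the following sketch could work. The helper name `extract_text` is hypothetical, and the response shape is an assumption: the sketch looks for “text” inside a top-level “result” object if one exists, and otherwise at the top level, so check it against the actual response you get.

```python
import json


def extract_text(response_body: str) -> str:
    """Return the transcribed text from a Whisper JSON response.

    Assumption: the "text" field sits either at the top level or inside
    a "result" object. Adjust this if your response looks different.
    """
    payload = json.loads(response_body)
    # Fall back to the top-level object when there is no "result" wrapper.
    result = payload.get("result", payload)
    if not isinstance(result, dict) or "text" not in result:
        raise ValueError("no 'text' field found in the response")
    return result["text"]
```

Raising an error when the field is missing, instead of returning an empty string, makes a failed or rejected request easier to notice.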

In this way, you can deploy your own instance of Whisper on Cloudflare. Workers AI is great, and it’s really good news that it comes with a free tier. I also like that other AI models are available, such as Meta’s Llama. You can choose any of these models, deploy them on Cloudflare in a few clicks, and use them as APIs for whatever you want.

Closing thoughts:

If you are looking for an option to host Whisper as an API, Cloudflare has made that easy with Workers AI. In just a few seconds, you can deploy Whisper on Cloudflare’s fast and powerful network and transcribe audio files. The speech-to-text conversion works for many languages, and all you have to do is deploy it in your account. Just keep in mind that it is in beta right now, so things may change in later updates.