LLaVA is an open-source ChatGPT alternative with vision capabilities. It is an open-source multimodal model that you can run locally or in Google Colab. It answers your questions in a chat-like interface, just like other LLMs such as Llama 2. The best part is that it can also read images: give it an image and it will tell you what it shows, and you can then ask follow-up questions about that image.

LLMs keep getting more advanced, and the open-source community releases new models all the time. LLaVA was recently released on GitHub, and it is very powerful. If you have capable hardware, you can run it locally and ask it anything. It can answer questions on any subject, be it technology, history, or general knowledge. It can be your own personal AI assistant that you can use for free.

In the post below, I will discuss some of its main features and how you can deploy it.

Main Highlights of LLaVA:

Here are the 4 main highlights of this LLaVA model.

- LLaVA is probably the first attempt to use language-only GPT-4 to generate multimodal language-image instruction-following data.

- LLaVA, short for Large Language-and-Vision Assistant, is an end-to-end trained large multimodal model that combines a vision encoder and an LLM for general-purpose visual and language understanding.

- LLaVA shows exceptional multimodal chat abilities. Sometimes it even exhibits the behavior of multimodal GPT-4 on unseen images.

- The LLaVA team has made the GPT-4 generated visual instruction tuning data, the model, and the code base publicly available.
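To make the "vision encoder plus LLM" idea a bit more concrete, here is a toy sketch in plain Python. It is not LLaVA's actual code: the sizes, the random numbers, and the function names `linear_project` and `build_llm_input` are all made up for illustration. It only shows the general pattern of projecting image features into the language model's embedding space and feeding them in together with the text tokens (here they are simply placed before the text, which is a simplification).

```python
import random

def linear_project(features, weight):
    """Map each feature vector (length d_in) to length d_out.

    `weight` is a d_in x d_out matrix stored as a list of rows.
    """
    return [
        [sum(x * w for x, w in zip(vec, col)) for col in zip(*weight)]
        for vec in features
    ]

def build_llm_input(image_features, projection, text_embeddings):
    """Project image features into the LLM embedding space and
    place them in front of the text token embeddings."""
    visual_tokens = linear_project(image_features, projection)
    return visual_tokens + text_embeddings

# Toy sizes (hypothetical): 4 image patches, vision dim 3, LLM dim 2, 5 text tokens.
rng = random.Random(0)
image_features = [[rng.random() for _ in range(3)] for _ in range(4)]
projection = [[rng.random() for _ in range(2)] for _ in range(3)]
text_embeddings = [[rng.random() for _ in range(2)] for _ in range(5)]

sequence = build_llm_input(image_features, projection, text_embeddings)
print(len(sequence), len(sequence[0]))  # prints: 9 2
```

The language model then sees one sequence of 9 vectors, 4 coming from the image and 5 from the text, all with the same width.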

How to Install LLaVA for Free on Google Colab?

You can download and install LLaVA by following the instructions given in its GitHub repository, but I believe not everybody has hardware capable of running it smoothly. So instead you can use Google Colab, and I will explain below how you can do that.

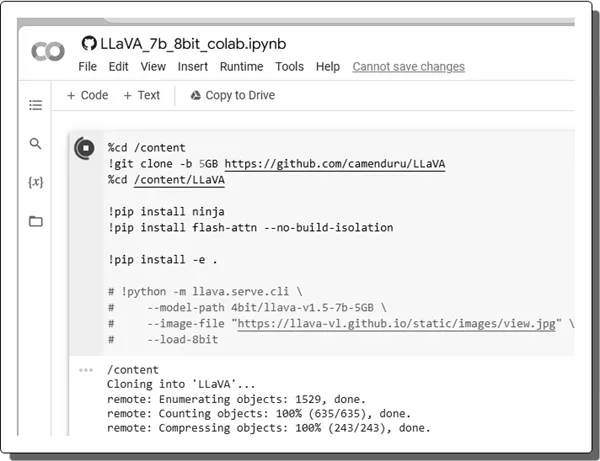

Go to this GitHub page and then click on the 4th link, which is: LLaVA_7b_8bit_colab 7B
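The notebook name suggests a 7-billion-parameter model loaded with 8-bit weights. As a rough back-of-the-envelope check of why that matters on limited hardware, the sketch below estimates the memory needed just for the weights. The parameter count is an assumption read off the "7B" in the name, and the helper `weight_memory_gib` is hypothetical. The real footprint will be higher, because the vision encoder, activations, and generation cache need memory too.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory needed just to hold the weights, in GiB."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / (1024 ** 3)

params_7b = 7_000_000_000  # assumed from the "7B" in the notebook name

for bits in (16, 8):
    print(f"{bits}-bit weights: ~{weight_memory_gib(params_7b, bits):.1f} GiB")
# prints:
# 16-bit weights: ~13.0 GiB
# 8-bit weights: ~6.5 GiB
```

So 8-bit loading roughly halves the weight memory compared with 16-bit, which is presumably why an 8-bit notebook is offered for Colab.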

It will take you to Google Colab; just make sure that you are signed in. It will open like this.

Now, run the first cell by clicking on the play button. It will take some time to collect and set up the dependencies for you automatically. Be patient while it is doing that.

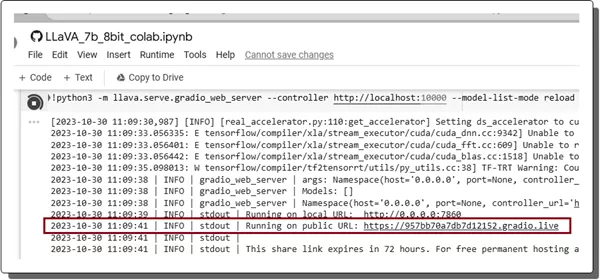

Similarly, run the remaining cells one by one and wait for each to finish. A few seconds after you run the last cell, you will see the Gradio link.

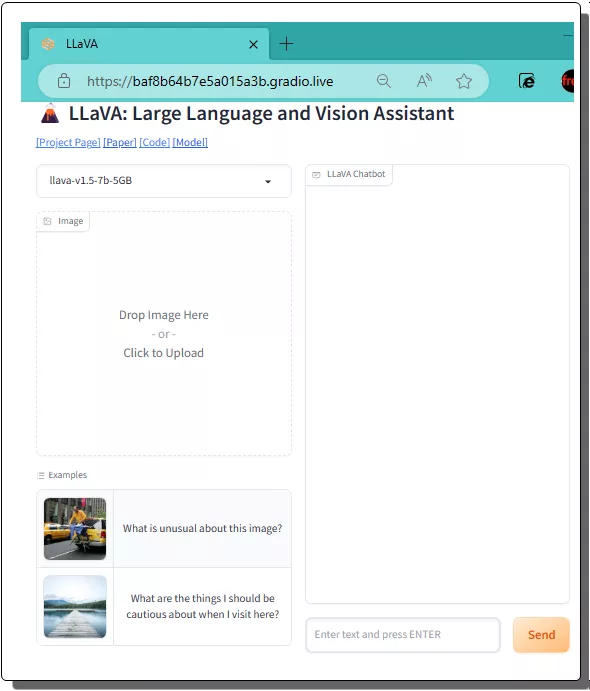

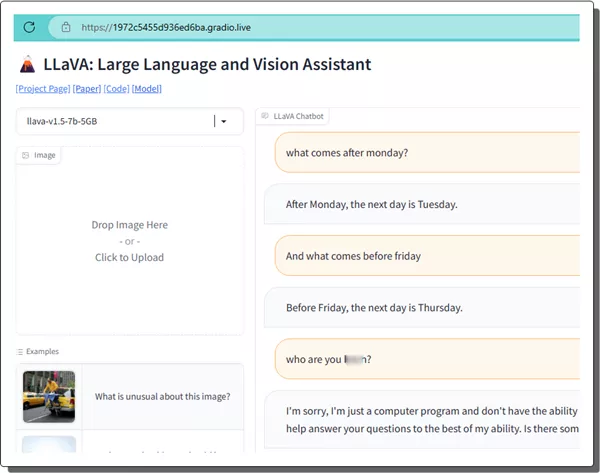

Click on the Gradio link and the web UI of LLaVA will open in a new tab. It looks like this.

At this point, you have successfully run LLaVA on Google Colab. Just follow the next section to see how to use it to get answers to your questions and read images.

How to use LLaVA LLM like ChatGPT?

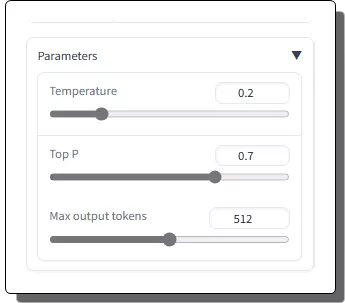

Now that you have the chat-like interface of LLaVA, you can just start using it. But before that, I suggest you configure some parameters first. Expand the parameters section and configure temperature, Top P, max tokens, etc.
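If you are wondering what these parameters do, here is a small, self-contained sketch of how temperature and Top P usually work in text generation. This is a generic illustration, not LLaVA's own sampling code; the function names and the example scores are made up. Temperature rescales the model's scores before they become probabilities (lower means more predictable answers), and Top P keeps only the most likely tokens whose combined probability reaches the chosen threshold. Max tokens simply caps how long the answer can get.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative
    probability reaches top_p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}

def sample_token(logits, temperature=0.7, top_p=0.9, rng=random):
    """Sample one token index: temperature scaling first, then Top P filtering."""
    candidates = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    indices = list(candidates)
    weights = [candidates[i] for i in indices]
    return rng.choices(indices, weights=weights, k=1)[0]

# Hypothetical scores for a four-token vocabulary.
logits = [2.0, 1.0, 0.5, -1.0]
print(softmax_with_temperature(logits, 1.0))  # fairly spread out
print(softmax_with_temperature(logits, 0.2))  # almost all mass on the first token
print(sample_token(logits, temperature=0.7, top_p=0.9, rng=random.Random(0)))
```

In practice, a lower temperature and a lower Top P give more focused, repeatable answers, while higher values give more varied ones.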

Now, you can simply start the chat. You can ask simple as well as complex questions and it will answer them for you. It will automatically understand the context and then will take some time to spit out the answer. You can ask it anything you want.

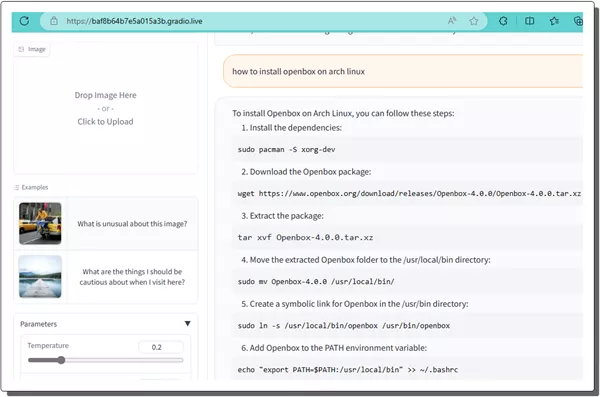

It can also generate long answers. Ask it some sophisticated questions on different subjects. For example, you can ask it how to install Openbox on Arch Linux, and it will give you the right answer.

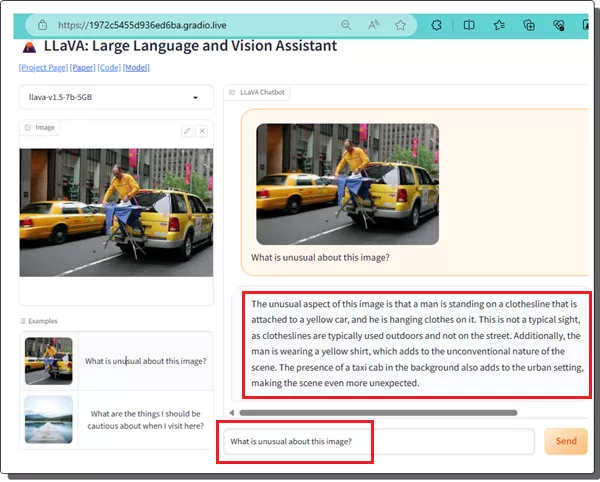

In a similar fashion, you can also upload an image and then enter a prompt. It will analyze both and generate an answer accordingly. Its vision is very powerful, and it is quick to identify objects as well as the overall context of the image.

In this way, you can keep the conversation going for as long as you want. You can also clear the chat and start again with a new topic and question. However, I didn’t see an option in the chat to specify a system role. So, I hope the developers and contributors of this project consider adding that in the next update.
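Until a system role option exists, one possible workaround is to put your instructions at the top of the first message you send. The sketch below is only a hypothetical helper for building such a message (the name `with_system_instructions` is made up), and there is no guarantee the model follows the instructions as strictly as it would a real system prompt.

```python
def with_system_instructions(system_text, user_message):
    """Prefix a user message with instructions, as a stand-in for a system role."""
    system_text = system_text.strip()
    user_message = user_message.strip()
    if not system_text:
        return user_message
    return f"{system_text}\n\n{user_message}"

first_message = with_system_instructions(
    "You are a concise assistant. Answer in at most three sentences.",
    "What is shown in the uploaded image?",
)
print(first_message)
```

You would paste the resulting text into the chat box as your first message and then continue the conversation normally.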

Wrap up…

Are you looking for a personal LLM that you can run easily on your PC or in the cloud for free? Then look no further, as LLaVA is just the tool you are looking for. No matter if you are a student or a researcher, this tool will help you. Just try it as I have explained here, and you will be good to go. If you are interested in its source and more technical details, you can go ahead and check out its GitHub repository.